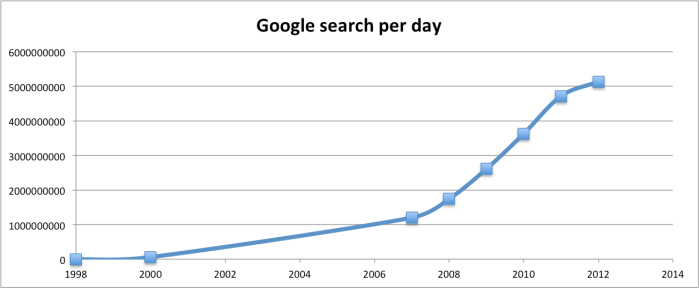

In the year 1998 Google handled 9800 average search queries every day. In 2012 this number shot up to 5.13 billion average searches per day. The graph given below shows this astronomical growth.

| Year | Average Search per day |

| 1998 | 9800 |

| 2000 | 60000000 |

| 2007 | 1200000000 |

| 2008 | 1745000000 |

| 2009 | 2610000000 |

| 2010 | 3627000000 |

| 2011 | 4717000000 |

| 2012 | 5134000000 |

The major reason for google’s success is because of its pageRank algorithm. PageRank determines how trustworthy and reputable a given website is. But there is also another part. The input query entered by the user should be used to match the relevant documents and score them. In this post I will focus on the second part. I have created 3 documents to show how this works. Take some time to go through them.

Document 1: The game of life is a game of everlasting learning Document 2: The unexamined life is not worth living Document 3: Never stop learning

Let us imagine that you are doing a search on these documents with the following query: life learning

The query is a free text query. It means a query in which the terms of the query are typed freeform into the search interface, without any connecting search operators.

Let us go over each step in detail to see how it all works.

Step 1: Term Frequency (TF)

Term Frequency also known as TF measures the number of times a term (word) occurs in a document. Given below are the terms and their frequency on each of the document.

TF for Document 1

| Document1 | the | game | of | life | is | a | everlasting | learning |

| Term Frequency | 1 | 2 | 2 | 1 | 1 | 1 | 1 | 1 |

TF for Document 2

| Document2 | the | unexamined | life | is | not | worth | living |

| Term Frequency | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

TF for Document 3

| Document3 | never | stop | learning |

| Term Frequency | 1 | 1 | 1 |

In reality each document will be of different size. On a large document the frequency of the terms will be much higher than the smaller ones. Hence we need to normalize the document based on its size. A simple trick is to divide the term frequency by the total number of terms. For example in Document 1 the term game occurs two times. The total number of terms in the document is 10. Hence the normalized term frequency is 2 / 10 = 0.2. Given below are the normalized term frequency for all the documents.

Normalized TF for Document 1

| Document1 | the | game | of | life | is | a | everlasting | learning |

| Normalized TF | 0.1 | 0.2 | 0.2 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 |

Normalized TF for Document 2

| Document2 | the | unexamined | life | is | not | worth | living |

| Normalized TF | 0.142857 | 0.142857 | 0.142857 | 0.142857 | 0.142857 | 0.142857 | 0.142857 |

Normalized TF for Document 3

| Document3 | never | stop | learning |

| Normalized TF | 0.333333 | 0.333333 | 0.333333 |

Given below is the code in python which will do the normalized TF calculation.

def termFrequency(term, document): normalizeDocument = document.lower().split() return normalizeDocument.count(term.lower()) / float(len(normalizeDocument))

Step 2: Inverse Document Frequency (IDF)

The main purpose of doing a search is to find out relevant documents matching the query. In the first step all terms are considered equally important. In fact certain terms that occur too frequently have little power in determining the relevance. We need a way to weigh down the effects of too frequently occurring terms. Also the terms that occur less in the document can be more relevant. We need a way to weigh up the effects of less frequently occurring terms. Logarithms helps us to solve this problem.

Let us compute IDF for the term game

IDF(game) = 1 + loge(Total Number Of Documents / Number Of Documents with term game in it)

There are 3 documents in all = Document1, Document2, Document3

The term game appears in Document1

IDF(game) = 1 + loge(3 / 1)

= 1 + 1.098726209

= 2.098726209

Given below is the IDF for terms occurring in all the documents. Since the terms: the, life, is, learning occurs in 2 out of 3 documents they have a lower score compared to the other terms that appear in only one document.

| Terms | IDF |

| the | 1.405507153 |

| game | 2.098726209 |

| of | 2.098726209 |

| life | 1.405507153 |

| is | 1.405507153 |

| a | 2.098726209 |

| everlasting | 2.098726209 |

| learning | 1.405507153 |

| unexamined | 2.098726209 |

| not | 2.098726209 |

| worth | 2.098726209 |

| living | 2.098726209 |

| never | 2.098726209 |

| stop | 2.098726209 |

Given below is the python code to calculate IDF

def inverseDocumentFrequency(term, allDocuments): numDocumentsWithThisTerm = 0 for doc in allDocuments: if term.lower() in allDocuments[doc].lower().split(): numDocumentsWithThisTerm = numDocumentsWithThisTerm + 1 if numDocumentsWithThisTerm > 0: return 1.0 + log(float(len(allDocuments)) / numDocumentsWithThisTerm) else: return 1.0

Step 3: TF * IDF

Remember we are trying to find out relevant documents for the query: life learning

For each term in the query multiply its normalized term frequency with its IDF on each document. In Document1 for the term life the normalized term frequency is 0.1 and its IDF is 1.405507153. Multiplying them together we get 0.140550715 (0.1 * 1.405507153). Given below is TF * IDF calculations for life and learning in all the documents.

| Document1 | Document2 | Document3 | |

| life | 0.140550715 | 0.200786736 | 0 |

| learning | 0.140550715 | 0 | 0.468502384 |

Step 4: Vector Space Model – Cosine Similarity

From each document we derive a vector. If you need some refresher on vector refer here. The set of documents in a collection then is viewed as a set of vectors in a vector space. Each term will have its own axis. Using the formula given below we can find out the similarity between any two documents.

Cosine Similarity (d1, d2) = Dot product(d1, d2) / ||d1|| * ||d2|| Dot product (d1,d2) = d1[0] * d2[0] + d1[1] * d2[1] * … * d1[n] * d2[n] ||d1|| = square root(d1[0]2 + d1[1]2 + ... + d1[n]2) ||d2|| = square root(d2[0]2 + d2[1]2 + ... + d2[n]2)

Vectors deals only with numbers. In this example we are dealing with text documents. This was the reason why we used TF and IDF to convert text into numbers so that it can be represented by a vector.

Refer to the link for detailed explanation of cosine similarity and vector space model. I found the following explanation in the book Mahout in Action very useful.

The cosine measure similarity is another similarity metric that depends on envisioning user preferences as points in space. Hold in mind the image of user preferences as points in an n-dimensional space. Now imagine two lines from the origin, or point (0,0,…,0), to each of these two points. When two users are similar, they’ll have similar ratings, and so will be relatively close in space—at least, they’ll be in roughly the same direction from the origin. The angle formed between these two lines will be relatively small. In contrast, when the two users are dissimilar, their points will be distant, and likely in different directions from the origin, forming a wide angle. This angle can be used as the basis for a similarity metric in the same way that the Euclidean distance was used to form a similarity metric. In this case, the cosine of the angle leads to a similarity value. If you’re rusty on trigonometry, all you need to remember to understand this is that the cosine value is always between –1 and 1: the cosine of a small angle is near 1, and the cosine of a large angle near 180 degrees is close to –1. This is good, because small angles should map to high similarity, near 1, and large angles should map to near –1.

The query entered by the user can also be represented as a vector. We will calculate the TF*IDF for the query

| TF | IDF | TF*IDF | |

| life | 0.5 | 1.405507153 | 0.702753576 |

| learning | 0.5 | 1.405507153 | 0.702753576 |

Let us now calculate the cosine similarity of the query and Document1. You can do the calculation using this tool.

Cosine Similarity(Query,Document1) = Dot product(Query, Document1) / ||Query|| * ||Document1||

Dot product(Query, Document1)

= ((0.702753576) * (0.140550715) + (0.702753576)*(0.140550715))

= 0.197545035151

||Query|| = sqrt((0.702753576)2 + (0.702753576)2) = 0.993843638185

||Document1|| = sqrt((0.140550715)2 + (0.140550715)2) = 0.198768727354

Cosine Similarity(Query, Document) = 0.197545035151 / (0.993843638185) * (0.198768727354)

= 0.197545035151 / 0.197545035151

= 1

Given below is the similarity scores for all the documents and the query

| Document1 | Document2 | Document3 | |

| Cosine Similarity | 1 | 0.707106781 | 0.707106781 |

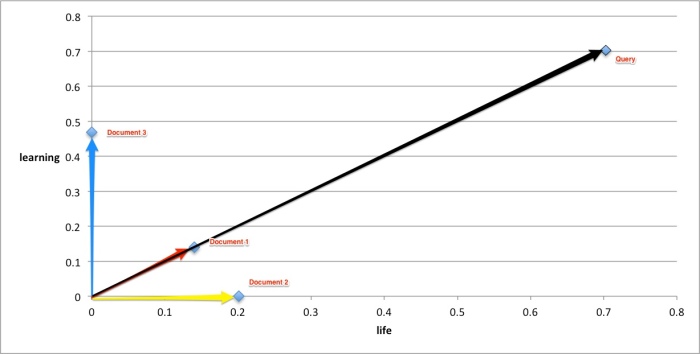

I plotted vector values for the query and documents in 2-dimensional space of life and learning. Document1 has the highest score of 1. This is not surprising as it has both the terms life and learning.

Hey,

Thanks for the awesome article very helpful ..

why is 0.5 of Tf(life) and Tf(learning)? I can not understand

Sam,

In the search query ‘life learning’ each term occurs one. There are two terms in total. Hence the TF(life) and TF(learning) is 1/2 or 0.5

Regards,

Jana

previous comment was not posted correctly, I uploaded it here http://www.speedyshare.com/FESse/TextSimilarity.rar

Sam,

The link is asking me to download an executable file and I do not have Windows operating system. Can you upload the file in box.com and share the link to me.

Regards,

Jana

https://app.box.com/s/zcyymwe9ld319v1semhw I uploaded it here

Jana Vembunarayanan,

Have you check it?

Sam,

I am not familiar with c# and do not have an environment to run your code. Hence I am not able to find any issues with your code.

The best way to troubleshoot is to print out the values for tf, idf and their products separately and see if it differs from my calculations.

I did all the calculations manually while writing this post to explain the core of the concept. May be your code is correct and my manual calculations could have a bug.

Regards,

Jana

Jana Vembunarayanan,

ok, I’ll explain what I have written…

well, I take this 3 document, split and convert it to string array.

Than each array I calculate:

TF – which is number of times a word occurs in a document / whole word’s count of document.

IDF – log(Total Number Of Documents / Number Of Documents with term game in it) as you said.

and at last Weight = TF * IDF.

After all of this done I have term’s weight in each document. Now I want to use Cosine similarity function.

In CalculateCosineSimilarity method I assign two parameters double[ ] vecA, double[ ] vecB, which is weights of Document1 and Document2. Than I calculate dot product, magnitudeOfA and magnitudeOfB and at last calculate it: Cos = dotProduct / (magnitudeOfA * magnitudeOfB): this is all about code. Is it correct what I have written?

Sam,

1. For IDF calculation you need to add 1 to the log(Total Number Of Documents / Number Of Documents with term game in it) as log(1) = 0

2. You need to compute the dot product between the document vector and the query vector and not between documents.

Regards,

Jana

Jana Vembunarayanan,

I did not understand this part(about query) well…

In your example user enters some text and than comparing it to this documents, is not it?

In my case I want to compare one of this 3 document to each other. for example want to compare Document1 to Document2 and Document3, and query what will be in this case? Document1?

Sam,

I got it and looks correct to me.

Regards,

Jana

Thnx for this awesome article sir. It was quite helpful but just having a bit of a trouble in how you created document vectors after tf-idf value. I am sorry if i asked something silly but was having trouble in it.

Regards,

Shivam

Shivam,

Thanks. The output from (Step 3: TF * IDF) is the vector for each document.

Using this we compute the cosine on the query vector.

Regards,

Jana

ok i get it, Thank u again 🙂

Regards,

Shivam

Hi,

Thanks for the awesome article. It was really helpful. Although, I have question. In case my query contains a word which does not exist in any of the documents(say “electronics”) , how should I calculate the IDF score for “electronics” ?

I need the IDF score for “electronics” to calculate ||Query|| while I am computing the similarity between the Query and say Document 1.

Himanshu,

If none of the documents has the term then set its IDF to zero.

Regards,

Jana

Hi Jana,

That will be a good thing to do, but I seem to observe your algorithm does otherwise:

if numDocumentsWithThisTerm > 0:

return 1.0 + log(float(len(allDocuments)) / numDocumentsWithThisTerm)

else:

return 1.0

Anyhow, it doesn’t matter in the results of the query after all I guess. (Correct me from wrong.)

Regards,

Jerôme

frikking brilliant, man. God bless you.

Hi Jana, thank you very much for this brilliant article!

I have a question about the formula you wrote:

Cosine Similarity(Query,Document1) = Dot product(Query, Document1) / ||Query|| * ||Document1||

And particularly about the way you compute ||Document1||:

||Document1|| = sqrt((0.140550715)**2 + (0.140550715)**2) = 0.198768727354

If I understand correctly, you are summing over the tf*idf values (relative to Document1) of the terms that are present in both Document1 and Query (“life” and “learning”). However, from the formula on Wikipedia (http://en.wikipedia.org/wiki/Cosine_similarity#Definition) I understand that the norm of Document1 should be obtained summing over the TF-IDF values of all its terms (“the”, “game”, “of”, “life”, etc…).

I am pretty sure I am missing something out here, can you help me out with this please?

Thanks!

Dario

Dario,

You are correct as the formula for calculating norm should include all the values.

Take a look at the code from lenskit.

CosineSimilarity – http://goo.gl/2VqDOS

Norm calculation – http://goo.gl/Fnev40

Regards

Jana

Ok, good to know… Thanks a lot!

As in, you’re dividing the dot product by a larger norm, and thus you’d not get 1 (or am I really confused?)

Nice post, In the regard to your comment and Dario’s, calculating the norm of each document would entail just to sum each row of the tfidf matrix. right? What seems odd to me is that when I do that (having used l2 norm in the scikit-learn Tfidfvectorizer module), the normalised vector is greater than 1. What am I missing?

Hi Jana, thanks for the nice article. I understood how you have calculated the TF of query terms (Life and learning). But, unfortunately I haven’t got how you calculated the IDF for the query terms. Could you please explain it?

Hi Jana, I found many articles about tf-idf and cosine similarity but your is the best, good explanation with examples is exactly what I needed. Thank you very much for your time to write this great article. I wish you luck in your next work!

Best regards, Jerry

Brilliant article Sir. Thanks !

I have a small doubt : while calculating the idf for query vector how come 1.4055 comes? Shouldn’t we consider query as a single document .? In that case 1+log( 1/1) = 1 right? Please clarify this .

Good explanation!

But that happens if we had a query with only one term?

Angle between two one-dimensional vectors not even defined. It seems like the whole vector-space model doesn’t give a solution for such cases. Yet, one-term queries ain’t esoteric at all.

The normalized query vector becomes the unity vector [1.0]

The normalized document vector may be either [1.0] for documents that have this term, or [0.0] for documents that don’t have it.

We end up with binary rating, neglecting the tf ratings in documents, without ability to sort the results or get the top K of them….

Actually, this corner case may appear in multi-word queries as well. Repeating the same term many times in the query wouldn’t make a difference here. Having query with multiple distinct terms, where only one of them stands for real known term, would bring similar results. Because if some term isn’t indexed, we wouldn’t compute 1.0 + log(0) which isn’t defined, but rather force it to be 0.0.

Regards,

Alexei

Dear Jana and Alexei,

Thanks to your article the algoritm has really became clear to me. Great explaination, thanks!

Alexei, I’m also curious about the same question!

I was thinking about it, and it seems that this is exacty the reason why document 2 and 3 have the same score as well. For relevance to the query only the angular similarity is taken in account. This could be implemented, since the table in step 3 already shows a greater match for doc 3 than for doc 2, in a way.

I think this is not something like a bug, but more a choice of implementation. Therefore my question will be why, if I may.

Regards,

Jerôme

Nice explanation. Thanks.

Such a perfect article that exactly what I have been looking for !!! God bless you !!

Hi every one, I’ve read this article and I feel such a perfect. Now I want to have a program completely that it was writen in Python. God bless you and thank you so much!

Please get me a program completely to I can refer it.

thank you very much!

Hi Jana Vembunarayanan and everyone

how to calculate similarity between a query and documents using Python program

I have a set of documents and i have calculate both

1)Term -Frequency

2)Inverse-Frequency

3)TF/IDF

Now i need to calculate the similarity between a specific query (input from user) and a document (input from a file.txt) which will produce a score that will rank the document from the highest similarity to the lowest similarity towards the query.

Can anyone guide me ? I just need to know how to proceed from my current progress.

thanks

Hello Jana Vembunarayanan

Very good article, easy to understand.

One doubt is that about cosine similartiy for document 2 because answer is showing 0.707106781

But life value becomes 0.20078 and learning =0

so answer become 0. May be i am wrong.

Can you explain for document 2

May be i am wrong

Thanks

Hello thanks for the article, how would you calculate Tf of query if query had two or more similiar words i.e { life, life, learning} for the word life would it be 2/2 or 1/3

Reblogged this on Talking Crap Blog.

Hello Sir,here you have explained how to find the cosine similarity between a query and a document.So by using the above same process can I find the cosine similarity between two documents using texts.

Thanks

Hello this is really nice explanation. if you have java code which executed correctly and give correct result so please give it to me.

thank you.. finally found a good explanation

I tried my own example I get Cosine Similarity(Query, Document) divided by zero, so what to do??

In termFrequency function the term and document is asked …I don’t have an idea for that.. pls explain

what is term .

Which tool do you used to plot the vectors?

Excel sheet.

Regards,

Jana

I tried Excel to plot vectors but its not work like yours

Hesham,

There are 3 charts. Which one are you referring to? If it’s the 2nd one then I got the image (not excel) and annotated it with life/learning.

Regards,

Jana

The third chart

Hesham,

I use Microsoft Excel and annotated the image using Preview software.

Regards,

Jana

Hello, Thanks for a very nice explanation. I am working on this project where I identify different books/short stories with various features such as alliteration, consonance and topics obtained by a topic modelling software (mallet) so what I have is a feature matrix where rows form the documents and each of the columns are features so Column1 = alliteration Score (normalized over the length of the book), Column2 = consonance Score, and Column3 = Topic1 frequence, and Col4 = Top 2 frequency et cetera. All the values usually range between 0 and 1. So, I am using these values instead of the tf-idf scores. Do you think something like cosine similarity could still work on it?

Use any suitable clustering method say M as long as the method M works on pure Plain Text, language-independently. Thus, M must work anyway, regardless of whether the texts are French, Italian, whatsoever:

—> Extract the ‘pure’ medieval plain text out of the tagged MOM charters, which gives a large set S of ‘pure’ texts.

—> On all documents in S, apply your spelling normalization, which yields a normalized set S’.

—> Then use the chosen method M to make clusters in S, obtaining a clustering result C.

—> Then use the same method M to make clusters in S’, obtaining a clustering results C’.

—> Finally compare if there are statistically significant differences between C and C’.

For this task can I use TF-IDF, a method I am saying M?

-> can any one provide code for TF IDF and cosine similarity in matlab

This article is so informative and straightforward. Thanks a lot. You even included additional mathematical reference documents. So good!!!

can someone explain the IDF calculation of Query-words. what is total number of documents ?

if it is one(i.e only query document) then ln(1)=0.

Hello.

i need help in calculating the Tf-idf scores.i have ten text files,and i need to calculate the Tf-Idf values of all the words presenting in all the documents and display their values in document wise.i’ve tried it using JAVA with ArrayLists,but i was getting a lot of errors while calculating tfidf score for all the words in document wise.

In case there is only one document TF*IDF=TF. ….coz IDF=1 for all terms.

Hello,

What is best way to identify similar documents – is it TF_IDF matrix consine similarity or Latent semantic analysis/indexing cosine similarity? What advantage does Latent semantic analysis have? Any good links and examples please provide. Does LSM/LSI have better performance and how is rank of matrix chosen for SVD? Does LSM/LSI solve issue like – assume we are searching documents for financial “BANK” but we are getting documents related to medical blood “BANK”.

Thanks

This has been very helpful. I’ve read it again and again. But why will you use 1+log(..) for your idf? I believe since a term occurs in at least in 1 document, then you don’t need to add one. What do you think?

Thank you

Superb article with good illustrations. Will be very helpful to students

Thank you so much sir! Great article! Very clear and understandable even to a beginner!

hello sir,I need cosine similarity multi document text summarization related IEEE ppr after 2012 could u give me??

hello sir,I need cosine simmilarity MSD related IEEE Pprs afer 2012 pls sir could yuo give me??

Can you tell me how to derive cosine similarity formula?

Hi

How is the value IDF below 1.405507173 for the query. There is one document

Shouldnt this be 1+log(1/1) = 1 ?

life 0.5 1.405507153 0.702753576

learning 0.5 1.405507153 0.702753576

Reblogged this on Duong Trung Nghia.

Dear Jana,

I have the same question as asked above by Chintan.

One doubt is that about cosine similartiy for document 2 because answer is showing 0.707106781

But life value becomes 0.20078 and learning =0

so answer become 0.

Can you explain for document 2

how the cosine similarity graph done for query and document? i.e last graph of this article

Because log(0) is invalid, so please correct the formula to:

IDF = log(1+Total Number Of Documents / Number Of Documents with a term)

can i please get the python code for implementing cosine similarity for two text files.